ELK-Stack


Prerequisites

First install Java. It is recommended to install Oracle Java:

$ sudo mkdir /usr/java/

$ wget `curl --silent http://www.java.com/en/download/linux_manual.jsp | grep "Download Java software for Linux" | grep -v RPM | grep x64 | sort -u | cut -d "\"" -f4` -O /tmp/java-x64.tar.gz

$ sudo tar xzf /tmp/java-x64.tar.gz -C /usr/java --strip-components=1

Elasticsearch

Installation

Download and install:

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

$ echo "deb http://packages.elastic.co/elasticsearch/2.x/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-2.x.list

$ sudo apt-get update && sudo apt-get install elasticsearch

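Restrict access to your Elasticsearch instance (port 9200) to localhost only. The line is appended through sudo tee, because with sudo echo ... >> the redirection would still be performed by the unprivileged shell:

$ echo "network.host: localhost" | sudo tee -a /etc/elasticsearch/elasticsearch.yml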

Add the path to JAVA_HOME in /etc/default/elasticsearch:

$ sudo sed -i '1 i\JAVA_HOME=/usr/java' /etc/default/elasticsearch

Register the autostart script and start elasticsearch:

$ sudo /bin/systemctl daemon-reload

$ sudo /bin/systemctl enable elasticsearch.service

$ sudo systemctl start elasticsearch.service

Check that the service has started:

$ systemctl status elasticsearch.service

Check your version:

$ curl -XGET http://localhost:9200

{

"name" : "Crooked Man",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.2.0",

"build_hash" : "8ff36d139e16f8720f2947ef62c8167a888992fe",

"build_timestamp" : "2016-01-27T13:32:39Z",

"build_snapshot" : false,

"lucene_version" : "5.4.1"

},

"tagline" : "You Know, for Search"

}
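If you want to script this check, the reply can be parsed instead of read by eye. Below is a minimal Python sketch, assuming the response body has already been captured as a string. The helper name es_version and the SAMPLE constant are mine and not part of Elasticsearch.

```python
import json

# Sample body copied from the curl output above.
SAMPLE = """{
  "name" : "Crooked Man",
  "cluster_name" : "elasticsearch",
  "version" : {
    "number" : "2.2.0",
    "build_hash" : "8ff36d139e16f8720f2947ef62c8167a888992fe",
    "build_timestamp" : "2016-01-27T13:32:39Z",
    "build_snapshot" : false,
    "lucene_version" : "5.4.1"
  },
  "tagline" : "You Know, for Search"
}"""


def es_version(body):
    """Return (major, minor, patch) parsed from the root endpoint's JSON reply."""
    number = json.loads(body)["version"]["number"]
    return tuple(int(part) for part in number.split("."))


print(es_version(SAMPLE))  # (2, 2, 0)
```

In practice the body would come from the curl call above or from any HTTP client.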

Plugins

Watcher

Install:

$ cd /usr/share/elasticsearch/

$ sudo bin/plugin install elasticsearch/license/latest

$ sudo bin/plugin install elasticsearch/watcher/latest

-> Installing elasticsearch/watcher/latest...

Trying https://download.elastic.co/elasticsearch/watcher/watcher-latest.zip ...

Downloading ..............................................................................................DONE

Verifying https://download.elastic.co/elasticsearch/watcher/watcher-latest.zip checksums if available ...

Downloading .DONE

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: plugin requires additional permissions @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

* java.lang.RuntimePermission getClassLoader

* java.lang.RuntimePermission setContextClassLoader

* java.lang.RuntimePermission setFactory

See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html for descriptions of what these permissions allow and the associated risks.

Continue with installation? [y/N]y

Installed watcher into /usr/share/elasticsearch/plugins/watcher

Restart elasticsearch:

$ sudo systemctl restart elasticsearch.service

Check install:

$ curl -XGET 'http://localhost:9200/_watcher/stats?pretty'

{

"watcher_state" : "started",

"watch_count" : 0,

"execution_thread_pool" : {

"queue_size" : 0,

"max_size" : 0

},

"manually_stopped" : false

}

Logstash

Installation

Install:

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

$ echo "deb http://packages.elastic.co/logstash/2.2/debian stable main" | sudo tee -a /etc/apt/sources.list

$ sudo apt-get update && sudo apt-get install logstash

Logstash seems unable to locate Java when Java has been installed manually. All of the methods below failed for me:

- set JAVA_HOME in ~/.bashrc

- add JAVA_HOME/bin to PATH environment variable

- add JAVA_HOME=/usr/java in /etc/default/logstash

- update JAVACMD=/usr/java/bin/java in /etc/default/logstash

The only trick that worked for me:

$ sudo ln -s /usr/java/bin/java /usr/bin/

Start:

$ sudo systemctl daemon-reload

$ sudo systemctl enable logstash.service

$ sudo systemctl start logstash.service

Then make sure that it is running:

$ systemctl status logstash.service

Index configuration files

Example 1: squid access.log

input {

file {

type => "squid"

path => [ "/var/log/squid/access.log" ]

}

}

filter {

if [type] == "squid" {

grok {

match => [ "message", "%{NUMBER:timestamp}\s+%{NUMBER:request_msec:int} %{IPORHOST:src_ip} %{WORD:cache_result}/%{NUMBER:response_status:int} %{NUMBER:response_size:int} %{WORD:http_method} (%{WORD:http_proto}://)?%{IPORHOST:dst_host}(?::%{POSINT:port})?(?:%{URIPATHPARAM:uri_param})? %{USERNAME:cache_user} %{WORD:request_route}/(%{IPORHOST:forwarded_to}|-) %{GREEDYDATA:content_type}" ]

add_tag => ["squid"]

}

date {

match => [ "timestamp", "UNIX" ]

remove_field => [ "timestamp" ]

}

}

}

output {

elasticsearch { hosts => localhost }

stdout { codec => rubydebug }

}
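To get a feeling for what the grok and date filters of Example 1 produce, here is a rough Python approximation. The regular expression is a simplified stand-in for the grok pattern (it is looser than IPORHOST, URIPATHPARAM and friends), and the sample log line and the names SQUID and parse_squid are made up for illustration.

```python
import re
from datetime import datetime, timezone

# Simplified equivalent of the grok expression; group names match the grok field names.
SQUID = re.compile(
    r"(?P<timestamp>\d+(?:\.\d+)?)\s+"
    r"(?P<request_msec>\d+) "
    r"(?P<src_ip>\S+) "
    r"(?P<cache_result>\w+)/(?P<response_status>\d+) "
    r"(?P<response_size>\d+) "
    r"(?P<http_method>\w+) "
    r"(?:(?P<http_proto>\w+)://)?"
    r"(?P<dst_host>[^\s/:]+)"
    r"(?::(?P<port>\d+))?"
    r"(?P<uri_param>/\S*)?"
    r" (?P<cache_user>\S+) "
    r"(?P<request_route>\w+)/(?P<forwarded_to>\S+) "
    r"(?P<content_type>.*)"
)

# Fields that the grok pattern casts with the ":int" suffix.
INT_FIELDS = ("request_msec", "response_status", "response_size")

# Hypothetical access.log line in Squid's native format.
SAMPLE_LINE = (
    "1458990000.123     45 192.168.1.10 TCP_MISS/200 1234 GET "
    "http://example.com/index.html - DIRECT/93.184.216.34 text/html"
)


def parse_squid(line):
    """Return a dict of extracted fields, or None when the line does not match."""
    match = SQUID.match(line)
    if match is None:
        return None  # Logstash would tag such an event with _grokparsefailure
    event = {key: value for key, value in match.groupdict().items() if value is not None}
    for name in INT_FIELDS:
        event[name] = int(event[name])
    # date filter: UNIX epoch seconds become @timestamp, the raw field is removed
    epoch = float(event.pop("timestamp"))
    event["@timestamp"] = datetime.fromtimestamp(epoch, tz=timezone.utc)
    return event


event = parse_squid(SAMPLE_LINE)
print(event["src_ip"], event["response_status"], event["dst_host"])
print(event["@timestamp"].strftime("%Y-%m-%dT%H:%M:%S"))  # 2016-03-26T11:00:00
```

Optional parts of the pattern (protocol, port, path) simply leave no field behind when they are absent, which is also how grok behaves.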

Example 2: Suricata eve.json

input {

file {

path => ["/var/log/suricata/eve.json"]

sincedb_path => "/var/lib/logstash/suricata.db"

codec => json

type => "SuricataIDPS"

}

}

filter {

if [type] == "SuricataIDPS" {

date {

match => [ "timestamp", "ISO8601" ]

}

ruby {

code => "if event['event_type'] == 'fileinfo'; event['fileinfo']['type']=event['fileinfo']['magic'].to_s.split(',')[0]; end;"

}

}

if [src_ip] {

geoip {

source => "src_ip"

target => "geoip"

database => "/opt/logstash/vendor/geoip/GeoLiteCity.dat"

add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

}

mutate {

convert => [ "[geoip][coordinates]", "float" ]

}

if ![geoip][ip] {

if [dest_ip] {

geoip {

source => "dest_ip"

target => "geoip"

database => "/opt/logstash/vendor/geoip/GeoLiteCity.dat"

add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

}

mutate {

convert => [ "[geoip][coordinates]", "float" ]

}

}

}

}

}

output {

elasticsearch {

hosts => localhost

#protocol => http

}

}
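The ruby filter and the two add_field lines of Example 2 are terse, so here is a rough Python rendering of what they compute, assuming events are plain dictionaries. The function names and the sample values are illustrative and not part of Logstash.

```python
def fileinfo_type(event):
    """Mimic the ruby filter: keep the text before the first comma of fileinfo.magic."""
    if event.get("event_type") == "fileinfo":
        magic = str(event["fileinfo"].get("magic") or "")
        # In Ruby, "".split(',')[0] is nil, so an empty magic yields None here.
        event["fileinfo"]["type"] = magic.split(",")[0] if magic else None
    return event


def add_coordinates(event):
    """Mimic add_field + mutate/convert: [longitude, latitude] as floats."""
    geoip = event.get("geoip")
    if geoip and "longitude" in geoip and "latitude" in geoip:
        # Longitude comes first, as in the config above.
        geoip["coordinates"] = [float(geoip["longitude"]), float(geoip["latitude"])]
    return event


event = {
    "event_type": "fileinfo",
    "fileinfo": {"magic": "PNG image data, 16 x 16, 8-bit/color RGBA, non-interlaced"},
    "geoip": {"longitude": "2.3522", "latitude": "48.8566"},  # made-up sample values
}
add_coordinates(fileinfo_type(event))
print(event["fileinfo"]["type"])      # PNG image data
print(event["geoip"]["coordinates"])  # [2.3522, 48.8566]
```

The longitude-first order matters: Elasticsearch reads a geo_point given as an array as [lon, lat].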

Grok patterns

Grok patterns are named regular expressions developed by Elasticsearch as helpers. The full list of grok patterns is available here: https://raw.githubusercontent.com/elastic/logstash/v1.4.2/patterns/grok-patterns. Below is a copy made on 2016-03-26:

USERNAME [a-zA-Z0-9._-]+

USER %{USERNAME}

INT (?:[+-]?(?:[0-9]+))

BASE10NUM (?<![0-9.+-])(?>[+-]?(?:(?:[0-9]+(?:\.[0-9]+)?)|(?:\.[0-9]+)))

NUMBER (?:%{BASE10NUM})

BASE16NUM (?<![0-9A-Fa-f])(?:[+-]?(?:0x)?(?:[0-9A-Fa-f]+))

BASE16FLOAT \b(?<![0-9A-Fa-f.])(?:[+-]?(?:0x)?(?:(?:[0-9A-Fa-f]+(?:\.[0-9A-Fa-f]*)?)|(?:\.[0-9A-Fa-f]+)))\b

POSINT \b(?:[1-9][0-9]*)\b

NONNEGINT \b(?:[0-9]+)\b

WORD \b\w+\b

NOTSPACE \S+

SPACE \s*

DATA .*?

GREEDYDATA .*

QUOTEDSTRING (?>(?<!\\)(?>"(?>\\.|[^\\"]+)+"|""|(?>'(?>\\.|[^\\']+)+')|''|(?>`(?>\\.|[^\\`]+)+`)|``))

UUID [A-Fa-f0-9]{8}-(?:[A-Fa-f0-9]{4}-){3}[A-Fa-f0-9]{12}

# Networking

MAC (?:%{CISCOMAC}|%{WINDOWSMAC}|%{COMMONMAC})

CISCOMAC (?:(?:[A-Fa-f0-9]{4}\.){2}[A-Fa-f0-9]{4})

WINDOWSMAC (?:(?:[A-Fa-f0-9]{2}-){5}[A-Fa-f0-9]{2})

COMMONMAC (?:(?:[A-Fa-f0-9]{2}:){5}[A-Fa-f0-9]{2})

IPV6 ((([0-9A-Fa-f]{1,4}:){7}([0-9A-Fa-f]{1,4}|:))|(([0-9A-Fa-f]{1,4}:){6}(:[0-9A-Fa-f]{1,4}|((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){5}(((:[0-9A-Fa-f]{1,4}){1,2})|:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3})|:))|(([0-9A-Fa-f]{1,4}:){4}(((:[0-9A-Fa-f]{1,4}){1,3})|((:[0-9A-Fa-f]{1,4})?:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){3}(((:[0-9A-Fa-f]{1,4}){1,4})|((:[0-9A-Fa-f]{1,4}){0,2}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){2}(((:[0-9A-Fa-f]{1,4}){1,5})|((:[0-9A-Fa-f]{1,4}){0,3}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(([0-9A-Fa-f]{1,4}:){1}(((:[0-9A-Fa-f]{1,4}){1,6})|((:[0-9A-Fa-f]{1,4}){0,4}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:))|(:(((:[0-9A-Fa-f]{1,4}){1,7})|((:[0-9A-Fa-f]{1,4}){0,5}:((25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)(\.(25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)){3}))|:)))(%.+)?

IPV4 (?<![0-9])(?:(?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2})[.](?:25[0-5]|2[0-4][0-9]|[0-1]?[0-9]{1,2}))(?![0-9])

IP (?:%{IPV6}|%{IPV4})

HOSTNAME \b(?:[0-9A-Za-z][0-9A-Za-z-]{0,62})(?:\.(?:[0-9A-Za-z][0-9A-Za-z-]{0,62}))*(\.?|\b)

HOST %{HOSTNAME}

IPORHOST (?:%{HOSTNAME}|%{IP})

HOSTPORT %{IPORHOST}:%{POSINT}

# paths

PATH (?:%{UNIXPATH}|%{WINPATH})

UNIXPATH (?>/(?>[\w_%!$@:.,-]+|\\.)*)+

TTY (?:/dev/(pts|tty([pq])?)(\w+)?/?(?:[0-9]+))

WINPATH (?>[A-Za-z]+:|\\)(?:\\[^\\?*]*)+

URIPROTO [A-Za-z]+(\+[A-Za-z+]+)?

URIHOST %{IPORHOST}(?::%{POSINT:port})?

# uripath comes loosely from RFC1738, but mostly from what Firefox

# doesn't turn into %XX

URIPATH (?:/[A-Za-z0-9$.+!*'(){},~:;=@#%_\-]*)+

#URIPARAM \?(?:[A-Za-z0-9]+(?:=(?:[^&]*))?(?:&(?:[A-Za-z0-9]+(?:=(?:[^&]*))?)?)*)?

URIPARAM \?[A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]]*

URIPATHPARAM %{URIPATH}(?:%{URIPARAM})?

URI %{URIPROTO}://(?:%{USER}(?::[^@]*)?@)?(?:%{URIHOST})?(?:%{URIPATHPARAM})?

# Months: January, Feb, 3, 03, 12, December

MONTH \b(?:Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?|Jul(?:y)?|Aug(?:ust)?|Sep(?:tember)?|Oct(?:ober)?|Nov(?:ember)?|Dec(?:ember)?)\b

MONTHNUM (?:0?[1-9]|1[0-2])

MONTHNUM2 (?:0[1-9]|1[0-2])

MONTHDAY (?:(?:0[1-9])|(?:[12][0-9])|(?:3[01])|[1-9])

# Days: Monday, Tue, Thu, etc...

DAY (?:Mon(?:day)?|Tue(?:sday)?|Wed(?:nesday)?|Thu(?:rsday)?|Fri(?:day)?|Sat(?:urday)?|Sun(?:day)?)

# Years?

YEAR (?>\d\d){1,2}

HOUR (?:2[0123]|[01]?[0-9])

MINUTE (?:[0-5][0-9])

# '60' is a leap second in most time standards and thus is valid.

SECOND (?:(?:[0-5]?[0-9]|60)(?:[:.,][0-9]+)?)

TIME (?!<[0-9])%{HOUR}:%{MINUTE}(?::%{SECOND})(?![0-9])

# datestamp is YYYY/MM/DD-HH:MM:SS.UUUU (or something like it)

DATE_US %{MONTHNUM}[/-]%{MONTHDAY}[/-]%{YEAR}

DATE_EU %{MONTHDAY}[./-]%{MONTHNUM}[./-]%{YEAR}

ISO8601_TIMEZONE (?:Z|[+-]%{HOUR}(?::?%{MINUTE}))

ISO8601_SECOND (?:%{SECOND}|60)

TIMESTAMP_ISO8601 %{YEAR}-%{MONTHNUM}-%{MONTHDAY}[T ]%{HOUR}:?%{MINUTE}(?::?%{SECOND})?%{ISO8601_TIMEZONE}?

DATE %{DATE_US}|%{DATE_EU}

DATESTAMP %{DATE}[- ]%{TIME}

TZ (?:[PMCE][SD]T|UTC)

DATESTAMP_RFC822 %{DAY} %{MONTH} %{MONTHDAY} %{YEAR} %{TIME} %{TZ}

DATESTAMP_RFC2822 %{DAY}, %{MONTHDAY} %{MONTH} %{YEAR} %{TIME} %{ISO8601_TIMEZONE}

DATESTAMP_OTHER %{DAY} %{MONTH} %{MONTHDAY} %{TIME} %{TZ} %{YEAR}

DATESTAMP_EVENTLOG %{YEAR}%{MONTHNUM2}%{MONTHDAY}%{HOUR}%{MINUTE}%{SECOND}

# Syslog Dates: Month Day HH:MM:SS

SYSLOGTIMESTAMP %{MONTH} +%{MONTHDAY} %{TIME}

PROG (?:[\w._/%-]+)

SYSLOGPROG %{PROG:program}(?:\[%{POSINT:pid}\])?

SYSLOGHOST %{IPORHOST}

SYSLOGFACILITY <%{NONNEGINT:facility}.%{NONNEGINT:priority}>

HTTPDATE %{MONTHDAY}/%{MONTH}/%{YEAR}:%{TIME} %{INT}

# Shortcuts

QS %{QUOTEDSTRING}

# Log formats

SYSLOGBASE %{SYSLOGTIMESTAMP:timestamp} (?:%{SYSLOGFACILITY} )?%{SYSLOGHOST:logsource} %{SYSLOGPROG}:

COMMONAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

COMBINEDAPACHELOG %{COMMONAPACHELOG} %{QS:referrer} %{QS:agent}

# Log Levels

LOGLEVEL ([Aa]lert|ALERT|[Tt]race|TRACE|[Dd]ebug|DEBUG|[Nn]otice|NOTICE|[Ii]nfo|INFO|[Ww]arn?(?:ing)?|WARN?(?:ING)?|[Ee]rr?(?:or)?|ERR?(?:OR)?|[Cc]rit?(?:ical)?|CRIT?(?:ICAL)?|[Ff]atal|FATAL|[Ss]evere|SEVERE|EMERG(?:ENCY)?|[Ee]merg(?:ency)?)
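Patterns in the list above reference one another with %{NAME}, and a field name can be attached with %{NAME:field}. The following Python sketch shows that expansion on three patterns copied from the list. It only illustrates the idea and is not how Logstash implements grok: the expand helper is hypothetical, and the type suffix used in Example 1 (as in %{NUMBER:request_msec:int}) is not handled.

```python
import re

# A few entries copied from the pattern list above.
PATTERNS = {
    "POSINT": r"\b(?:[1-9][0-9]*)\b",
    "PROG": r"(?:[\w._/%-]+)",
    "SYSLOGPROG": r"%{PROG:program}(?:\[%{POSINT:pid}\])?",
}

# Matches %{NAME} and %{NAME:field}.
TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(expression):
    """Recursively replace %{NAME} / %{NAME:field} references with plain regex."""
    def replace(match):
        name, field = match.group(1), match.group(2)
        inner = expand(PATTERNS[name])
        # A field name becomes a named group, a bare reference stays anonymous.
        return f"(?P<{field}>{inner})" if field else f"(?:{inner})"
    return TOKEN.sub(replace, expression)


regex = re.compile(expand("%{SYSLOGPROG}"))
print(regex.fullmatch("sshd[1234]").groupdict())  # {'program': 'sshd', 'pid': '1234'}
print(regex.fullmatch("cron").groupdict())        # {'program': 'cron', 'pid': None}
```

Some patterns in the list use atomic groups, written (?>...), which Python's re module only accepts from version 3.11 on, so not every entry can be pasted into this sketch unchanged.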

Plugins

geoip

To install geoip:

$ sudo mkdir /opt/logstash/vendor/geoip/

$ cd /opt/logstash/vendor/geoip/

$ sudo curl -O "http://geolite.maxmind.com/download/geoip/database/GeoLiteCity.dat.gz"

$ sudo gunzip GeoLiteCity.dat.gz

Note that the GeoLite databases are updated by MaxMind on the first Tuesday of each month. Therefore, if you want to always have the latest database, you should set up a cron job that will download the database once a month.

$ sudo crontab -e

Add the following content:

# m h dom mon dow command

0 0 10 * * wget -O - http://geolite.maxmind.com/download/geoip/database/GeoLiteCity.dat.gz | gunzip -c -f > /opt/logstash/vendor/geoip/GeoLiteCity.dat

Restart cron:

$ sudo systemctl restart cron.service
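The cron entry runs on the 10th of each month, which is always after MaxMind's first-Tuesday update. A small Python sketch to convince yourself, with a hypothetical helper name:

```python
from datetime import date, timedelta


def first_tuesday(year, month):
    """Return the date of the first Tuesday of the given month."""
    first = date(year, month, 1)
    # date.weekday() counts Monday as 0, so Tuesday is 1.
    return first + timedelta(days=(1 - first.weekday()) % 7)


print(first_tuesday(2016, 3))  # 2016-03-01
print(first_tuesday(2016, 4))  # 2016-04-05

# The first Tuesday falls on day 1 to 7, so a job on the 10th always runs after it.
print(all(first_tuesday(2016, month).day < 10 for month in range(1, 13)))  # True
```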

Kibana

Installation

At the time of this writing, Kibana has to be installed from the release archive:

$ cd /opt/

$ wget -qO - https://download.elastic.co/kibana/kibana/kibana-4.4.2-linux-x64.tar.gz | sudo tar -xzf -

$ sudo useradd kibana

$ sudo chown -R kibana:kibana /opt/kibana-4.4.2-linux-x64/

Now, create the following systemd autostart script:

$ cat /usr/lib/systemd/system/kibana.service

[Unit]

Description=kibana

Documentation=http://www.elastic.co

Wants=network-online.target

After=network-online.target

[Service]

User=kibana

Group=kibana

ExecStart=/opt/kibana-4.4.2-linux-x64/bin/kibana

Restart=always

StandardOutput=null

# Connects standard error to journal

StandardError=journal

[Install]

WantedBy=multi-user.target

Register and start the service:

$ sudo systemctl daemon-reload

$ sudo systemctl enable kibana.service

$ sudo systemctl start kibana.service

Make sure it has started:

$ systemctl status kibana.service

Optional: nginx

I recommend installing nginx as a reverse proxy in front of Kibana.

If you do, modify the Kibana configuration file so that Kibana only accepts connections from localhost, then install nginx:

# echo "server.host: \"localhost\"" >> /opt/kibana-4.4.2-linux-x64/config/kibana.yml

$ sudo aptitude update && sudo aptitude install nginx apache2-utils

Protect access (replace <user> with the desired username):

$ sudo htpasswd -c /etc/nginx/htpasswd.users <user>

And create the following file:

$ cat /etc/nginx/sites-available/default

server {

listen 80;

server_name localhost.local;

auth_basic "Restricted Access";

auth_basic_user_file /etc/nginx/htpasswd.users;

location / {

proxy_pass http://localhost:5601;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

Now restart nginx:

$ sudo service nginx restart

Now, instead of accessing the frontend with http://<server>:5601, access it with http://<server>.
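With auth_basic enabled, every client has to send the credentials created with htpasswd in an Authorization header (HTTP Basic authentication). Here is a small Python sketch of how that header is built, which can help when scripting requests against the proxy. The function name and the credentials are placeholders.

```python
import base64


def basic_auth_header(user, password):
    """Build the Authorization header for HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


print(basic_auth_header("user", "pass"))  # {'Authorization': 'Basic dXNlcjpwYXNz'}
```

Base64 is an encoding, not encryption, so over plain HTTP on port 80 the credentials can be read by anyone on the network path.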

Tests & troubleshooting

Tests

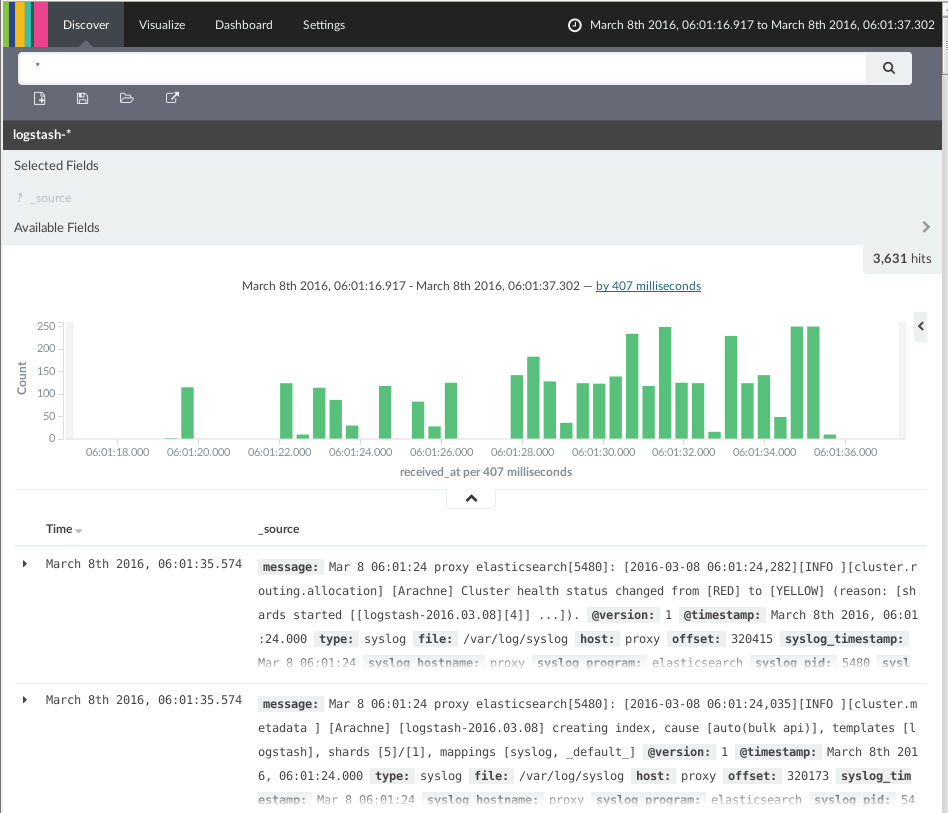

Go to Discover (modify clock on top right corner if needed) and you should be able to see data as follows:

Troubleshooting

You can use the following command to test a logstash configuration file:

$ /opt/logstash/bin/logstash agent --configtest --config /etc/logstash/conf.d/syslog.conf

Configuration OK