Skipfish



Description

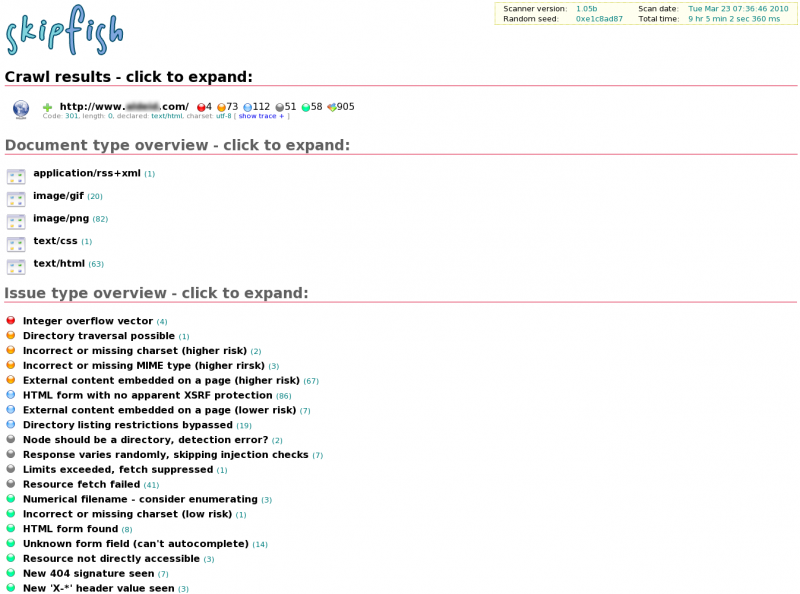

SkipFish was developed by Michal Zalewski, a Google engineer. The tool automates vulnerability assessment of web applications. It can process about 500 requests per second against a remote target and about 7,000 requests per second against a local one. It runs on Linux, FreeBSD 7.0+, Mac OS X, and Windows (via Cygwin).
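To put the throughput figures in perspective, the following sketch estimates how long a scan would take at each rate. The request count of 1,000,000 is a hypothetical scan size chosen for illustration, and `estimate_secs` is a helper defined here, not part of SkipFish.

```shell
#!/bin/sh
# estimate_secs REQUESTS RATE
# Prints the number of whole seconds needed to send REQUESTS at RATE
# requests per second, rounded up (integer ceiling division).
estimate_secs() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

REQUESTS=1000000   # hypothetical scan size, not a SkipFish default

REMOTE_SECS=$(estimate_secs "$REQUESTS" 500)    # remote rate quoted above
LOCAL_SECS=$(estimate_secs "$REQUESTS" 7000)    # local rate quoted above

echo "remote: ${REMOTE_SECS}s, local: ${LOCAL_SECS}s"
```

Under these assumptions the same scan takes roughly half an hour remotely and a few minutes locally. Real durations depend on how quickly the target responds.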

Installation

Prerequisites

# apt-get install libidn11-dev

Skipfish

# mkdir -p /usr/local/skipfish/
# cd /usr/local/skipfish/
# wget http://skipfish.googlecode.com/files/skipfish-1.10b.tgz
# tar xvf skipfish-1.10b.tgz
# cd skipfish/
# make

To check that it has been successfully installed, type:

# ./skipfish -h

Usage

Basic usage

# ./skipfish -W dictionaries/complete.wl -o report http://www.domain.com/index.php
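As a minimal sketch, the basic invocation can be wrapped in a small script that writes each scan to its own report directory. The timestamped directory name and the `RUN` switch are assumptions made for this example. Only the `-W` and `-o` options shown above are used.

```shell
#!/bin/sh
# Values taken from the basic usage example; adjust to your environment.
TARGET="http://www.domain.com/index.php"
WORDLIST="dictionaries/complete.wl"

# Hypothetical naming scheme: one report directory per scan.
OUTDIR="report-$(date +%Y%m%d-%H%M%S)"

# Build the command as positional parameters so every argument stays one word.
set -- ./skipfish -W "$WORDLIST" -o "$OUTDIR" "$TARGET"

# Keep a printable copy and show it for review.
BASIC_CMD="$*"
echo "$BASIC_CMD"

# Dry run by default: the scan starts only when RUN=1 is set
# and the binary exists in the current directory.
if [ "${RUN:-0}" = "1" ] && [ -x ./skipfish ]; then
    "$@"
fi
```

Run it from the directory that contains the `skipfish` binary, for example with `RUN=1 sh scan.sh`, where `scan.sh` is whatever name the script was saved under.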

Options

Authentication and access options

-A user:pass   - use specified HTTP authentication credentials
-F host:IP     - pretend that 'host' resolves to 'IP'
-C name=val    - append a custom cookie to all requests
-H name=val    - append a custom HTTP header to all requests
-b (i|f)       - use headers consistent with MSIE / Firefox
-N             - do not accept any new cookies

Crawl scope options

-d max_depth   - maximum crawl tree depth (16)
-c max_child   - maximum children to index per node (1024)
-r r_limit     - max total number of requests to send (100000000)
-p crawl%      - node and link crawl probability (100%)
-q hex         - repeat probabilistic scan with given seed
-I string      - only follow URLs matching 'string'
-X string      - exclude URLs matching 'string'
-S string      - exclude pages containing 'string'
-D domain      - crawl cross-site links to another domain
-B domain      - trust, but do not crawl, another domain
-O             - do not submit any forms
-P             - do not parse HTML, etc, to find new links
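A scan can be confined to one part of a site by combining several of these options. The following sketch prints such a command. The `/app/` and `/logout` paths and the numeric limits are hypothetical values, chosen to be well below the defaults listed above.

```shell
#!/bin/sh
# Hypothetical limits, well below the defaults listed above.
MAX_DEPTH=5          # -d: crawl at most 5 levels deep (default 16)
MAX_CHILD=100        # -c: index at most 100 children per node (default 1024)
MAX_REQUESTS=200000  # -r: stop after 200000 requests in total

# -I /app/   : only follow URLs containing "/app/"
# -X /logout : never follow URLs containing "/logout"
# -O         : do not submit any forms
set -- ./skipfish -d "$MAX_DEPTH" -c "$MAX_CHILD" -r "$MAX_REQUESTS" \
    -I /app/ -X /logout -O \
    -W dictionaries/complete.wl -o report-scope http://www.domain.com/app/

# Print the assembled command instead of running it.
SCOPE_CMD="$*"
echo "$SCOPE_CMD"
```

Capping depth, children, and total requests keeps the scan time bounded, at the cost of possibly missing pages outside those limits.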

Reporting options

-o dir         - write output to specified directory (required)
-J             - be less noisy about MIME / charset mismatches
-M             - log warnings about mixed content
-E             - log all HTTP/1.0 / HTTP/1.1 caching intent mismatches
-U             - log all external URLs and e-mails seen
-Q             - completely suppress duplicate nodes in reports

Dictionary management options

-W wordlist    - load an alternative wordlist (skipfish.wl)
-L             - do not auto-learn new keywords for the site
-V             - do not update wordlist based on scan results
-Y             - do not fuzz extensions in directory brute-force
-R age         - purge words hit more than 'age' scans ago
-T name=val    - add new form auto-fill rule
-G max_guess   - maximum number of keyword guesses to keep (256)

Performance settings

-g max_conn    - max simultaneous TCP connections, global (50)
-m host_conn   - max simultaneous connections, per target IP (10)
-f max_fail    - max number of consecutive HTTP errors (100)
-t req_tmout   - total request response timeout (20 s)
-w rw_tmout    - individual network I/O timeout (10 s)
-i idle_tmout  - timeout on idle HTTP connections (10 s)
-s s_limit     - response size limit (200000 B)
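For a fragile or slow target, the performance settings can be lowered from their defaults. The sketch below prints a gentler invocation. The chosen values are hypothetical. The check that the per-host limit does not exceed the global limit is a sanity step added for this example, not a documented SkipFish requirement.

```shell
#!/bin/sh
# Hypothetical, conservative values for a slow target.
GLOBAL_CONN=20   # -g: global simultaneous connections (default 50)
HOST_CONN=5      # -m: simultaneous connections per target IP (default 10)
MAX_FAIL=50      # -f: consecutive HTTP errors tolerated (default 100)
REQ_TIMEOUT=30   # -t: total request response timeout in seconds (default 20)

# Sanity step (an assumption of this sketch): a per-host limit above
# the global limit has no effect, so clamp it.
if [ "$HOST_CONN" -gt "$GLOBAL_CONN" ]; then
    HOST_CONN=$GLOBAL_CONN
fi

set -- ./skipfish -g "$GLOBAL_CONN" -m "$HOST_CONN" \
    -f "$MAX_FAIL" -t "$REQ_TIMEOUT" \
    -W dictionaries/complete.wl -o report-slow http://www.domain.com/

# Print the assembled command instead of running it.
PERF_CMD="$*"
echo "$PERF_CMD"
```

Fewer connections and a longer timeout reduce load on the target and make the scan take longer.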